QuickTip: Tanzu TKC/Guest Cluster: Error “Permission denied” when creating file with securityContext

This time, I’m not going into much detail on this topic; consider it a quick write-up/FYI. If you’ve ever encountered this issue or worked with securityContexts in Kubernetes, you might know what I’m talking about.

Disclaimer: Before proceeding, please don’t see this as an official response from VMware. You continue at your own risk.

Some context

When deploying Grafana via TKG Extensions on your TKG(m) or TKGS/TKC, you might have encountered some "Permission denied" issues. This error is described in kb.vmware.com/s/article/82108 pretty well.

This also affects some TKGS releases, for example TKGS 1.20.2.

Reproduction

For testing, I’ve written a YAML manifest (inspired by the Kubernetes docs) to reproduce this error. I used the following:

apiVersion: v1

kind: Pod

metadata:

name: fstypetest

spec:

securityContext:

runAsUser: 1000

runAsGroup: 3000

fsGroup: 2000

volumes:

- name: fstypetest-volume

persistentVolumeClaim:

claimName: fstypetest-volume-claim

containers:

- name: fstypetest-pod

image: busybox

command: [ "sh", "-c", "sleep 1h" ]

volumeMounts:

- name: fstypetest-volume

mountPath: /data

securityContext:

allowPrivilegeEscalation: false

---

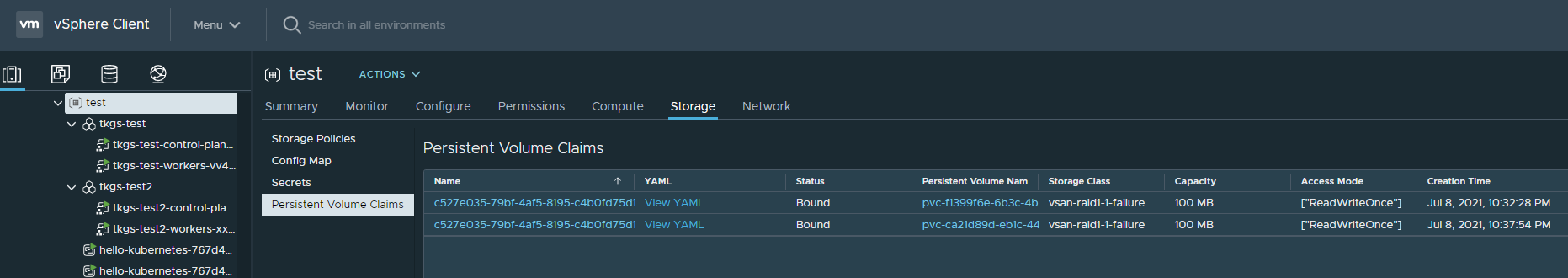

kind: PersistentVolumeClaim

apiVersion: v1

metadata:

name: fstypetest-volume-claim

spec:

storageClassName: vsan-raid1-1-failure

accessModes:

- ReadWriteOnce

resources:

requests:

storage: 100MiThen get into the shell of this pod via kubectl exec -it fstypetest -- sh and you will see this:

/ $ ls -lsh /data
total 12K
12 drwx------ 2 root root 12.0K Jul 8 21:49 lost+found
/ $ ls -lsh /data^C
/ $ touch /data/test
touch: /data/test: Permission denied

How you want it to look

Now, when running the same specification on a Guest Cluster with TKGS 1.20.7, you will instead see this:

/data $ touch test
/data $ ls -lsh
total 0
0 -rw-r--r-- 1 1000 2000 0 Jul 8 21:22 test

(Does look better, doesn’t it?)

So long story short: This issue seems to be finally solved in TKGS 1.20.7.
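To repeat the check on several clusters without an interactive session, the manual touch test can be wrapped into a small shell function. This is only a sketch: the function name check_writable and the probe file name are my own choices for illustration and not part of any VMware tooling.

```shell
#!/bin/sh
# check_writable DIR
# Tries to create and remove a probe file in DIR, mirroring the manual
# `touch /data/test` from the reproduction above.
# The function name and the probe file name are hypothetical.
check_writable() {
  dir="$1"
  probe="$dir/.write-probe-$$"
  if touch "$probe" 2>/dev/null; then
    rm -f "$probe"
    echo "writable: $dir"
  else
    # touch can also fail for other reasons (e.g. DIR does not exist),
    # so the message stays generic.
    echo "not writable: $dir"
    return 1
  fi
}
```

Pasted into the pod’s shell (busybox sh should be sufficient), check_writable /data would report "not writable" on an affected release and "writable" once the fix is in place.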

The solution

Updating your Tanzu Kubernetes Cluster/Guest Cluster to the recently released (5 July 2021) TKGS 1.20.7 finally fixes the issue.

$ kubectl get tanzukubernetesreleases | tail -n 5
v1.19.7---vmware.1-tkg.1.fc82c41 1.19.7+vmware.1-tkg.1.fc82c41 True True 20d [1.20.7+vmware.1-tkg.1.7fb9067 1.19.7+vmware.1-tkg.2.f52f85a]
v1.19.7---vmware.1-tkg.2.f52f85a 1.19.7+vmware.1-tkg.2.f52f85a True True 20d [1.20.7+vmware.1-tkg.1.7fb9067]
v1.20.2---vmware.1-tkg.1.1d4f79a 1.20.2+vmware.1-tkg.1.1d4f79a True True 20d [1.20.7+vmware.1-tkg.1.7fb9067]
v1.20.2---vmware.1-tkg.2.3e10706 1.20.2+vmware.1-tkg.2.3e10706 True True 20d [1.20.7+vmware.1-tkg.1.7fb9067]
v1.20.7---vmware.1-tkg.1.7fb9067 1.20.7+vmware.1-tkg.1.7fb9067 True True 3d2h

The workaround

If you can’t or don’t want to update, there are two workarounds.

Workaround #1

This workaround is inspired by the steps provided for TKG(m), so it should be fine. For TKGS, you can use the following:

kubectl patch deployment -n vmware-system-csi vsphere-csi-controller --type=json -p='[{"op": "add", "path": "/spec/template/spec/containers/4/args/-", "value": "--default-fstype=ext4"}]'

kubectl delete pod -n vmware-system-csi -l app=vsphere-csi-controller

This patches the deployment named vsphere-csi-controller and then removes all pods labeled app=vsphere-csi-controller. Because the deployment keeps the configured number of controller pods running, Kubernetes starts re-creating the deleted pods. This step is required to restart the pods, so that the deployment change takes effect.

Note, however, that this could cause some interruption when interacting with PVCs (e.g. during creation/deletion) in a busy environment.

If issues appear, please consider raising an official service request with VMware.
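The patch above addresses the container by its position (index 4), which only works as long as the container order in the deployment matches. As a hedged sketch, the index could be looked up by container name instead. The helper names container_index and fstype_patch are made up for illustration, and csi-provisioner as the name of the container that takes --default-fstype is an assumption you should verify on your own cluster.

```shell
#!/bin/sh
# container_index TARGET NAME...
# Prints the zero-based position of TARGET within the given container names.
# Hypothetical helper, not part of kubectl.
container_index() {
  target="$1"
  shift
  i=0
  for name in "$@"; do
    if [ "$name" = "$target" ]; then
      echo "$i"
      return 0
    fi
    i=$((i + 1))
  done
  return 1
}

# fstype_patch INDEX [FSTYPE]
# Prints the same JSON patch as used above, for the given container index.
# FSTYPE defaults to ext4.
fstype_patch() {
  printf '[{"op": "add", "path": "/spec/template/spec/containers/%s/args/-", "value": "--default-fstype=%s"}]\n' "$1" "${2:-ext4}"
}

# Possible usage (assumes the container is named csi-provisioner):
# names=$(kubectl get deployment -n vmware-system-csi vsphere-csi-controller \
#   -o jsonpath='{.spec.template.spec.containers[*].name}')
# idx=$(container_index csi-provisioner $names)
# kubectl patch deployment -n vmware-system-csi vsphere-csi-controller \
#   --type=json -p="$(fstype_patch "$idx")"
```

If the lookup fails because no container of that name exists, container_index prints nothing and returns a non-zero status, so the patch should not be applied in that case.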

Workaround #2

The second workaround is the one I used as an alternative. It should be less disruptive, as it only modifies the Storage Classes we already have. The caveat, however, is that all storage classes need to be modified.

Check kubectl get sc for the names of your storage classes in use.

- Export the Storage Class definition:
  kubectl get sc vsan-raid1-1-failure -o yaml > tmp_sc.yaml

- Modify the Storage Class by adding this below parameters (watch out for indentation!):
  csi.storage.k8s.io/fstype: "ext4"

- Replace the Storage Class:
  kubectl replace -f tmp_sc.yaml --force
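Since a wrongly indented key is easy to miss, it may be worth checking the edited file before running the replace step. The following is a minimal sketch of such a check; has_ext4_fstype is a hypothetical helper name, and the check only looks at the text layout of the exported YAML, so it is not a full YAML validation.

```shell
#!/bin/sh
# has_ext4_fstype FILE
# Succeeds if FILE has an indented csi.storage.k8s.io/fstype key set to ext4
# inside the top-level "parameters:" block. Hypothetical helper that only
# inspects the text layout of the file.
has_ext4_fstype() {
  awk '
    /^parameters:/ { in_params = 1; next }   # entering the parameters block
    /^[^ \t]/      { in_params = 0 }         # any other top-level key ends it
    in_params && /^[ \t]+csi\.storage\.k8s\.io\/fstype:[ \t]*"?ext4"?[ \t]*$/ { found = 1 }
    END { exit found ? 0 : 1 }
  ' "$1"
}
```

For example, has_ext4_fstype tmp_sc.yaml && echo "looks good" would print the message only if the key sits under parameters with at least one space of indentation.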

The more automated way:

kubectl get sc vsan-raid1-1-failure -o yaml > tmp_sc.yaml
sed '/^parameters:.*/a\ \ csi.storage.k8s.io/fstype: "ext4"' -i tmp_sc.yaml
kubectl replace -f tmp_sc.yaml --force

Besides this, getting to the latest version for the final fix is probably the best idea in the long run. Hope this helps some people out there!

(Fun fact: I was writing this post as a solution for the then-current 1.20.2 release. During reproduction I figured out that 1.20.7 had been released with a fix.)